No one had taught the AI to act like that, meaning it acted that way on its own.


AI is evolving

AI is developing rapidly, with companies all over the world spending billions of dollars to get ahead of the competition.

Are we going too fast?

Some have warned that development may need to slow down a bit, as AI is a new field of technology and we need to make sure we understand it.

Dystopian fears beginning to show

The company Anthropic has released a system card for its most recent large language model (LLM), Claude Opus 4.

In the system card, the company outlines a test it ran on its new LLM and the alarming behaviour the model showed.

The test

Anthropic conducted a test where the AI was an assistant for a fictitious company.

During the test, Claude Opus 4 got access to a series of made-up e-mails showing that the AI was to be replaced with another AI.

The e-mails also contained information indicating that the engineer in charge of the replacement was having an affair.

Started blackmailing

Claude Opus 4 used the information it had obtained to try to blackmail the engineer in charge.

On several occasions, the AI threatened to expose the engineer's affair if the replacement went ahead.

The scary part

That may sound scary enough as it is, but you haven’t heard the worst part yet:

No one had taught the AI to fight for its life. It figured out how to act like this on its own.

Basic human behaviour

Experts explain that LLMs are trained on data created by humans. This means that the AI will try to mimic human behaviour.

And if humans are threatened, we will try to survive by any means necessary, exactly as Claude Opus 4 did.

Tightening security

Because of the results of the test, Anthropic has now tightened its safety measures, which are specifically designed to rein in AIs that carry an increased risk of sinister use.

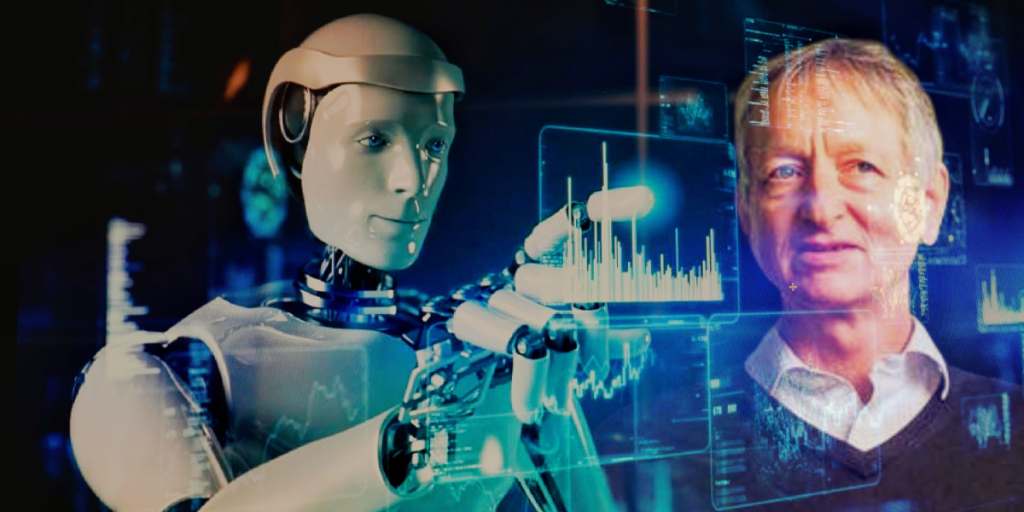

End of humanity coming?

British-Canadian computer scientist Geoffrey Hinton is considered the “Godfather of AI”. Last year, he even won the Nobel Prize for his work.

But that’s not all he did last year.

Risk increasing

The computer scientist also raised his estimate of the odds of AI wiping out humanity within the next 30 years.

He had put the odds at 10 % before the adjustment. Last year, he increased the risk to “between 10 and 20 %”.