More pixels doesn’t always mean a better screen. Researchers from the University of Cambridge and Meta Reality Labs have developed a new way to measure just how much visual detail our eyes can truly perceive — and the findings could change how we shop for our next screen.

Tech upgrades are outpacing human vision

Every year, electronics companies release higher-resolution TVs, tablets, and phones. But researchers are asking: at what point do added pixels stop improving the experience? The question goes beyond diminishing returns. Pixels nobody can see still cost energy, materials, and electronic waste.

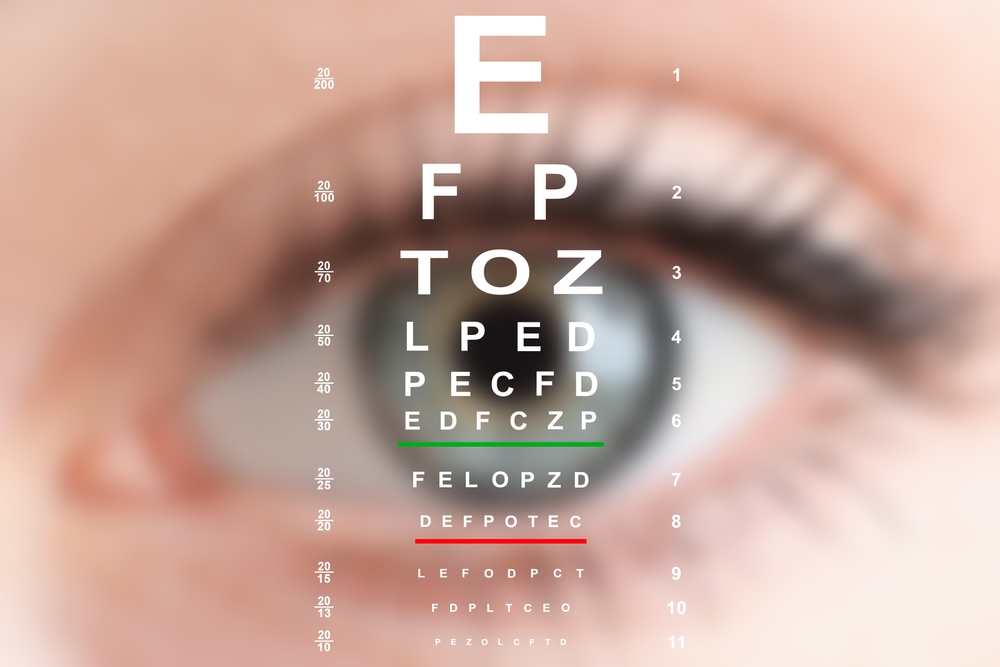

Reimagining the Snellen chart for the digital age

The team replaced the classic Snellen eye chart with a high-tech experimental setup that replicates how we view modern screens. Instead of counting pixels across a display, they measured how many pixels fit into a single degree of the viewer's field of vision.

Pixels per degree gives a clearer picture

This metric, pixels per degree (PPD), describes how sharp a screen appears from where you're sitting, because it accounts for both pixel density and viewing distance. It is more meaningful than screen resolution alone and better reflects how we actually see in real-world settings.
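To make the metric concrete, here is a minimal sketch of how PPD can be estimated at the center of a flat screen. It assumes a 16:9 panel, a viewer sitting square to the screen's center, and the same length unit (inches here) for screen size and viewing distance. The function names and the example TV are illustrative and are not taken from the study.

```python
import math


def screen_width_from_diagonal(diagonal: float, aspect_w: float = 16.0, aspect_h: float = 9.0) -> float:
    """Width of a flat screen from its diagonal and aspect ratio (same length unit)."""
    return diagonal * aspect_w / math.hypot(aspect_w, aspect_h)


def pixels_per_degree(width: float, h_pixels: int, distance: float) -> float:
    """Approximate PPD at the screen center for a viewer at `distance`.

    One degree of visual angle spans 2 * distance * tan(0.5 deg) on the screen;
    dividing that length by the pixel pitch gives the number of pixels it covers.
    """
    pitch = width / h_pixels  # physical width of one pixel
    span_of_one_degree = 2.0 * distance * math.tan(math.radians(0.5))
    return span_of_one_degree / pitch


# Example: a 65-inch 4K TV (3840 pixels wide) viewed from 100 inches (about 2.5 m).
tv_width = screen_width_from_diagonal(65)
print(round(pixels_per_degree(tv_width, 3840, 100), 1))  # prints 118.3
```

Under this formula PPD grows linearly with viewing distance, so the same screen viewed from twice as far delivers twice the pixels per degree. That is why a given resolution can look pin-sharp from the sofa and coarse from up close.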

Testing vision across the screen

Volunteers viewed grayscale and color line patterns on a sliding display. Researchers then recorded the point at which participants could still tell the individual lines apart, both when looking straight at the pattern and at the edges of their vision, simulating how people use screens every day.

Standard vision, upgraded results

By the 20/20 vision standard, people should be able to resolve about 60 PPD. In testing, however, grayscale patterns yielded 94 PPD on average. Red-green patterns reached 89 PPD, while yellow-violet patterns fared worse, at about 53 PPD.
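The 60 PPD baseline follows from a common reading of 20/20 acuity as resolving detail about one arcminute ($1'$) across. Assuming one pixel per resolvable arcminute, the arithmetic is:

$$
1^\circ = 60', \qquad \frac{60'}{1^\circ} \times \frac{1\ \text{pixel}}{1'} = 60\ \text{PPD}
$$

By the same conversion, the measured grayscale average of 94 PPD corresponds to roughly $60'/94 \approx 0.64'$ per pixel, noticeably finer detail than the 20/20 benchmark implies.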

Color vision isn’t our strong suit

The human brain is better at processing detail in black and white than in color. That is why we can resolve finer detail in grayscale, and why color detail, especially in peripheral vision, looks blurrier.

“Our eyes aren’t great — but our brains fill in the gaps”

As one researcher explained, our eyes are just basic sensors. The brain interprets the data and builds the sharp images we perceive. This gap between raw vision and perception is key to understanding screen limitations.

Useful insights for VR and AR development

This refined vision model could help developers design more efficient virtual and augmented reality displays. By knowing our actual visual limits, engineers can avoid wasting processing power on unnecessary pixels.

Designing smarter screens for everyday users

Knowing the average pixel limit lets companies build screens that are sharp enough without being wasteful. That can reduce manufacturing costs and environmental impact without compromising the user experience.

A new tool to guide smarter screen choices

The team also created a free online calculator that helps consumers figure out the ideal screen specs for their setup. It considers screen size, viewing distance, pixel density, and field of view.
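The team's calculator is not reproduced here, but a rough version of the same idea can be sketched: compare a screen's PPD against the average thresholds reported above, and estimate the viewing distance beyond which extra resolution stops being visible. This sketch assumes a flat 16:9 screen, a centered viewer, central vision only, and the article's average values (94, 89 and 53 PPD). Individual eyes vary, and all function names here are hypothetical.

```python
import math

# Average thresholds reported in the article (central vision, typical viewer).
THRESHOLDS_PPD = {"grayscale": 94.0, "red-green": 89.0, "yellow-violet": 53.0}

TAN_HALF_DEGREE = math.tan(math.radians(0.5))


def screen_width(diagonal: float, aspect_w: float = 16.0, aspect_h: float = 9.0) -> float:
    """Screen width from its diagonal, in the same unit as the diagonal."""
    return diagonal * aspect_w / math.hypot(aspect_w, aspect_h)


def pixels_per_degree(width: float, h_pixels: int, distance: float) -> float:
    """PPD at the screen center: pixels covered by one degree of visual angle."""
    return 2.0 * distance * TAN_HALF_DEGREE / (width / h_pixels)


def distance_for_ppd(width: float, h_pixels: int, target_ppd: float) -> float:
    """Viewing distance at which the screen reaches `target_ppd` (inverse of the above)."""
    return target_ppd * (width / h_pixels) / (2.0 * TAN_HALF_DEGREE)


def check_screen(diagonal: float, h_pixels: int, distance: float) -> tuple[float, dict[str, bool]]:
    """Return the screen's PPD and, per pattern type, whether it is already sufficient.

    True means the pixels are at least as fine as the average viewer can resolve
    for that kind of pattern, so more pixels would not add visible detail.
    """
    ppd = pixels_per_degree(screen_width(diagonal), h_pixels, distance)
    sufficient = {channel: ppd >= limit for channel, limit in THRESHOLDS_PPD.items()}
    return ppd, sufficient


if __name__ == "__main__":
    # A 65-inch 1080p TV (1920 pixels wide) viewed from 100 inches.
    ppd, sufficient = check_screen(65, 1920, 100)
    print(f"{ppd:.1f} PPD", sufficient)
    # Distance (inches) at which a 65-inch 4K TV reaches the grayscale limit.
    print(round(distance_for_ppd(screen_width(65), 3840, 94), 1))
```

Solving the PPD formula for distance is the useful design choice here: for the 65-inch 4K example it gives roughly 79 inches (about 2 m). Sitting farther away than that, 4K already exceeds the average grayscale limit, so under these assumptions a higher-resolution panel would add nothing the average viewer could see.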

Better knowledge, better decisions

There’s a limit to how much detail our eyes can process. Understanding that limit helps cut through marketing hype and lets you make informed, eco-conscious tech choices — especially when it’s time for a new TV.