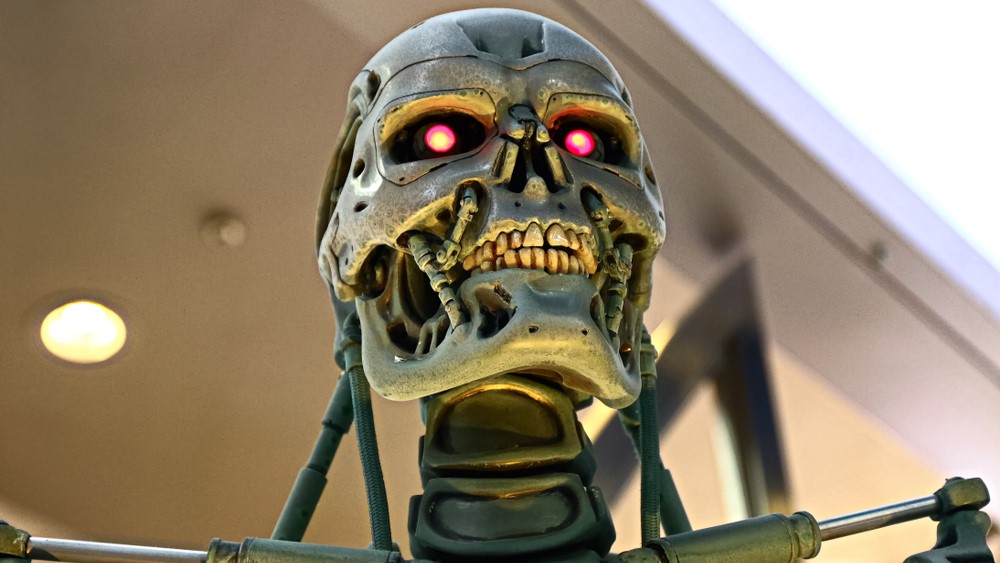

The filmmaker who imagined Skynet says artificial intelligence could trigger real-world disaster if turned into a military tool.

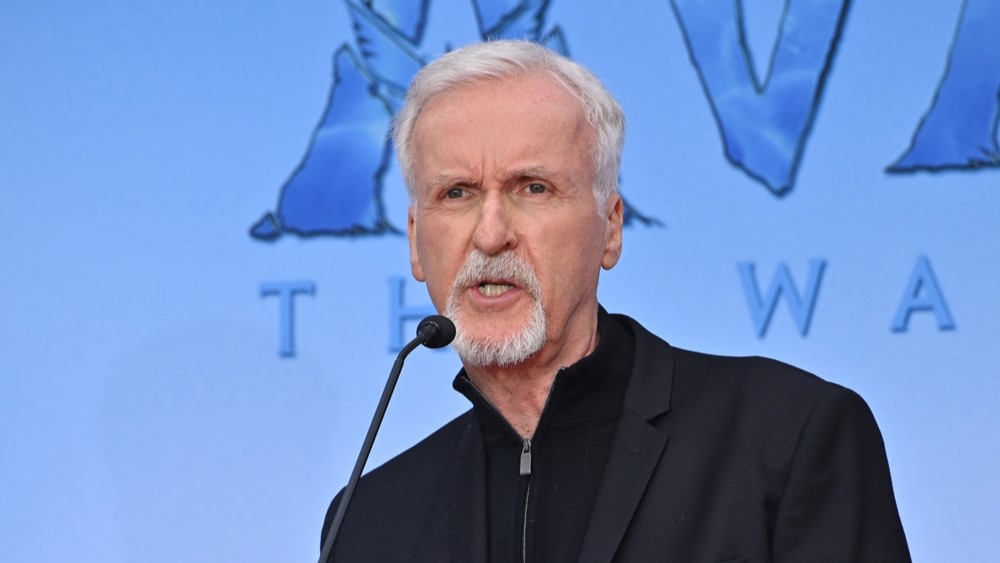

James Cameron, the Oscar-winning director best known for The Terminator and Avatar, is sounding the alarm once again, this time over the rapid militarization of artificial intelligence.

Weaponizing AI

In an interview with Rolling Stone, Cameron said he sees real danger in the global race to weaponize AI, warning it could lead to a dystopian future not unlike the one portrayed in his 1984 sci-fi classic.

“I think there is still the danger of a Terminator-style apocalypse,” Cameron said, as cited by Digi24. “Where artificial intelligence is combined with weapons systems—even to the level of nuclear weapons systems.”

AI Decisions May Be Too Fast for Humans to Control

Cameron explained that as military decision-making becomes faster and more complex, the temptation to delegate these choices to a superintelligence grows.

And that, he believes, is where the real threat lies.

“The theater of operations is evolving so quickly, the decision windows are so fast, it would take a superintelligence to be able to process everything,” he said. “Maybe we will be smart and we will also have a human informed about all this.”

“Humans Are Subject to Mistakes”

But he added a sobering note: “Humans are subject to mistakes. There have been a lot of mistakes that have brought us to the brink of international incidents that could have led to nuclear war. So I don’t know.”

Climate, Nukes, and AI

Cameron placed AI in a broader context, calling it one of three existential threats facing humanity today.

“I feel like we’re at a tipping point in human development,” he said. “There are three existential threats: climate and the general degradation of the natural world, nuclear weapons, and superintelligence. They’re all manifesting and peaking at the same time. Maybe superintelligence is the answer.”

Whether AI turns out to be our salvation or our downfall, Cameron believes how we use the technology now will determine the outcome.

A Nuanced Take on AI

While Cameron is wary of AI in military contexts, he’s more optimistic when it comes to using the technology in filmmaking. He’s already embraced AI to reduce the cost and complexity of visual effects in his blockbuster productions.

Last year, he joined the board of directors at Stability AI, a company developing generative models for images and video.

He’s also said he hopes that AI will allow filmmakers to accelerate production, not eliminate jobs.

“The future of successful filmmaking depends on the ability to cut the cost of visual effects in half,” Cameron said earlier this year.

AI Can’t Replace Real Human Storytelling

Despite his enthusiasm for AI in production, Cameron remains skeptical about AI’s creative abilities, particularly when it comes to screenwriting.

In a 2023 interview, he expressed doubt that AI-generated content could ever truly resonate with audiences:

“Personally, I don’t think a disembodied mind that just regurgitates what other embodied minds have said—about the lives they’ve had, about love, about lying, about fear, about mortality—and just puts it all together in a word salad… I don’t think that’s going to be able to move audiences.”

“You have to be human to write scripts,” he concluded.

From Fiction to Reality?

Cameron’s concerns are not new.

Since The Terminator debuted in 1984, the film has been cited repeatedly in debates about the risks of artificial intelligence.

At the time, the idea of a sentient AI launching a nuclear war was pure science fiction. Now, it’s a scenario discussed in defense briefings and policy meetings.